- 1 Post

- 53 Comments

6·29 days ago

I’m a little slut for some hummus

Do you condom hummus?

10·1 month ago

Antitrust is the right approach. (As opposed to copyright.) I hope Google gets decimated.

92·2 months ago

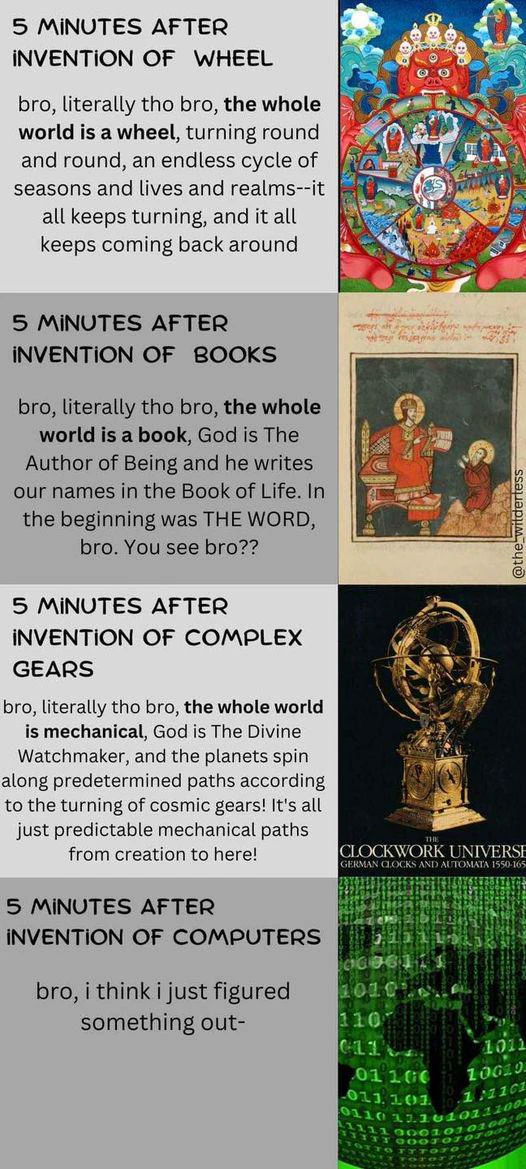

92·2 months agoIf you were born during the first industrial revolution, then you’d think the mind was a complicated machine. People seem to always anthropomorphize inventions of the era.

19·2 months ago

Citation Needed (by Molly White) also frequently bashes AI.

I like her stuff because, no matter how you feel about crypto, AI, or other big tech, you can never fault her reporting. She steers clear of any subjective accusations or prognostication.

It’s all “ABC person claimed XYZ thing on such and such date, and then 24 hours later submitted a report to the FTC claiming the exact opposite. They later bought $5 million worth of Trumpcoin, and two weeks later the FTC announced they were dropping the lawsuit.”

828·2 months ago

I think one of the most toxic things on Lemmy is the prevalence of judging normies for using incredibly popular services and ascribing it to a character defect instead of life just being too complex for most people to be able to prioritize exploring more ethical technology choices.

4·2 months ago

We have mistaken rationality for a philosophy rather than a methodology, and efficiency for a virtue without any particular end in mind.

To have a unique, personal, subjective, divergent human experience is to sin against your prescribed algorithm.

111·2 months ago

Polluting the sky in order to pollute the internet 👌

11·2 months ago

Yes, that’s a good addition.

Overall, my point was not that scraping is a universal moral good, but that legislating tighter boundaries for scraping in an effort to curb AI abuses is a bad approach.

We have better tools to combat this, and placing new limits on scraping will do collateral damage that we should not accept.

And at the very least, the portfolio value of Disney’s IP holdings should not be the motivating force behind AI regulation.

41·2 months ago

I’d say that scraping as a verb implies an element of intent. It’s about compiling information about a body of work, not simply making a copy, and therefore if you can accurately call it “scraping” then it’s always fair use. (Accuse me of “No True Scotsman” if you would like.)

But since it involves making a copy (even if only a temporary one) of licensed material, there’s the potential that you’re doing one thing with that copy which is fair use, and another thing with the copy that isn’t fair use.

Take archive.org for example:

It doesn’t only contain information about the work, but also a copy (or copies, plural) of the work itself. You could argue (and many have) that archive.org only claims to be about preserving an accurate history of a piece of content, but functionally mostly serves as a way to distribute unlicensed copies of that content.

I don’t personally think that’s a justified accusation, because I think they do everything in their power to be as fair as possible, and there’s a massive public benefit to having a service like this. But it does illustrate how you could easily have a scenario where the stated purpose is fair use but the actual implementation is not, and the infringing material was “scraped” in the first place.

But in the case of gen AI, I think it’s pretty clear that the residual data from the source content is much closer to a linguistic analysis than to an internet archive. So it’s firmly in the fair use category, in my opinion.

Edit: And to be clear, when I say it’s fair use, I only mean in the strict sense of following copyright law. I don’t mean that it is (or should be) clear of all other legal considerations.

4012·2 months ago

I say this as a massive AI critic: Disney does not have a legitimate grievance here.

AI training data is scraping. Scraping is — and must continue to be — fair use. As Cory Doctorow (fellow AI critic) says: Scraping against the wishes of the scraped is good, actually.

I want generative AI firms to get taken down. But I want them to be taken down for the right reasons.

Their products are toxic to communication and collaboration.

They are the embodiment of a pathology that sees humanity — what they might call inefficiency, disagreement, incoherence, emotionality, bias, chaos, disobedience — as a problem, and technology as the answer.

Dismantle them on the basis of what their poison does to public discourse, shared knowledge, connection to each other, mental well-being, fair competition, privacy, labor dignity, and personal identity.

Not because they didn’t pay the fucking Mickey Mouse toll.

91·3 months ago

If it’s shit, that’s bad.

If it’s the shit, that’s good.

91·3 months ago

How about robots that heal people?

So if library users stop communicating with each other and with the library authors, how are library authors gonna know what to do next? Unless you want them to talk to AIs instead of people, too.

At some point, when we’ve disconnected every human from each other, will we wonder why? Or will we be content with the answer “efficiency”?

3·3 months ago

The process is supposed to be sustainable. That doesn’t mean you can take one activity and do it to the exclusion of all others and have that be sustainable.

Edit:

Also, regrettably, I’m using the now-common framing where “agile” === Scrum.

If we wanna get pure about it, the manifesto doesn’t say anything about sprints. (And also, you don’t do agile… you do a process which is agile. It’s a set of criteria to measure a process against, not a process itself.)

And reasonable people can definitely assert that Scrum does not meet all the criteria in the agile manifesto — at least, as Scrum is usually practiced.

5·3 months ago

It’s funny (or depressing), because the original concept of agile is very well aligned with an open source/inner source philosophy.

The whole premise of a sprint is supposed to be that you move quickly and with purpose for a short period of time, and then you stop and refactor and work on your tools or whatever other “non value-add” stuff tends to be neglected by conventional deliverable-focused processes.

The term “sprint” is supposed to make it clear that it’s not a sustainable 100%-of-the-time every-single-day pace. It’s one mode of many.

Buuuut that’s not how it turned out, is it?

132·3 months ago

So what counts as dictating my life?

The government prohibiting me from firing my gun in the air, or my neighbor’s falling bullets prohibiting me from leaving my porch?

I’m always suspicious of those who assume there is only “freedom to do” and not also “freedom from being done-to”.

They tend to think they will never be on the receiving end of someone else’s “freedom”.

4·3 months ago

Also the people who do the tagging and feedback for training tend to be underpaid third-world workers.

66·3 months ago

Another day, another speculative execution vulnerability.

So like, when do we get a government-run service to issue zero-knowledge proofs about us so companies have no reason to store stuff like this in the first place?